23 Apr

Episode 146 | The Misbehavior of Organisms: The Brelands’ Impactful Article on “Instinctive Drift” (Plus: Reminder about this weekend’s special film screening with Bob Bailey!)

This weekend (Saturday, April 24th at 4 PM ET) we are hosting a screening of the short film Patient Like The Chipmunks, by Drs. Bob Bailey and Marian Breland-Bailey, followed by a conversation and Q and A session with Dr. Bailey himself.

In advance of this event, Annie has recently read aloud some of Dr. Bailey's work. Today, she reads a famous essay written not by him, but by his business partners: the late Keller Breland and Marian Breland (who would later marry Dr. Bailey, hence the hyphenated name). This article, The Misbehavior Of Organisms, first appeared in American Psychologist in 1961, and was titled in response to BF Skinner's book, The Behavior Of Organisms. The Brelands had worked closely with Skinner as graduate students, and were the first to bring his laboratory work into the commercial realm. There, working with over 100 species of animals, they discovered that it just isn't always possible to operantly condition a behavior. The reason? Sometimes, an animal's baked-in instincts take over and can't easily be overcome.

Mentioned in this episode:

School For The Dogs Professional Dog Trainer Course

Fill out the short survey on pet insurance and get a $5 coupon to storeforthedogs.com

Transcript:

Annie:

Hello humans. So I've posted a few Bonus episodes in the last few months that are me reading things relating to dog training and animal behavior, the science of behavior. Reading aloud short things that have impacted the way I think about behavior.

And I have read a couple of things in the last few weeks by Dr. Bob Bailey in anticipation of the screening and Q and A we are doing with Bob Bailey this weekend. You can still sign up at schoolforthedogs.com/Bailey. It is taking place this Saturday, April 24th, at 4:00 PM Eastern. We will be showing this rarely seen, really interesting short film by Bob Bailey called Patient Like the Chipmunks. And he will then be joining us after that for a conversation with me and a Q and A.

Bob Bailey really is a titan in the field of animal training. And this film talks about his rather extraordinary career, his business Animal Behavior Enterprises, which he started with his late wife, Marian Breland Bailey, and her late husband Keller Breland. And it talks about sort of the history of operant conditioning from BF Skinner's lab through today.

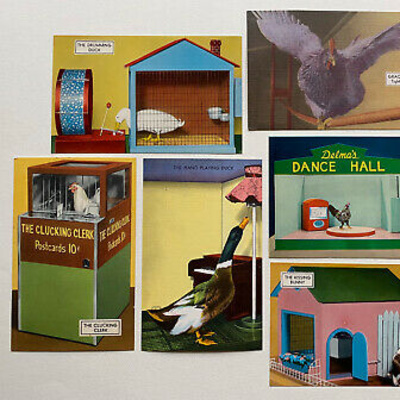

And it shows off some of the really entertaining and inspiring work that came out of Animal Behavior Enterprises, including their IQ Zoo, which was basically an amusement park they created of trained animals. It talks about some of their work with the military. Anyway, just really fascinating stuff.

So today I actually wanted to read a piece, an article that isn't by Bob Bailey. But he worked so closely with Marian Breland Bailey, his late wife, and her first husband, Keller Breland. And they are the authors of this article. Marian and Keller started Animal Behavior Enterprises. And then Bob Bailey came on. And Keller Breland died quite young in 1965. He was only 50 years old, and Marian ended up later marrying Bob, which is why she is known as Marian Breland Bailey today. But when she authored this article with her then-husband Keller Breland in 1961, she was just Marian Breland. So that's why she has multiple last names.

The article is called The Misbehavior of Organisms. Keller and Marian worked in BF Skinner's labs as graduate students when Skinner was at the University of Minnesota. And then basically took what they were learning, figuring out, codifying, in these laboratory environments and started working with literally hundreds of species of animals on a farm they bought in Arkansas in order to train animals mostly for commercial purposes.

They are often credited with being the first to use a clicker, and really did work with animals through their Animal Behavior Enterprises that is unlike any other kind of reinforcement-based animal training I've ever seen. I don't think any individual or company has taken things to the wild and wonderful extremes that they did. And some of this is talked about in this wonderful little film.

This essay is titled after BF Skinner's The Behavior of Organisms, a book he published in 1938, which talks a lot about operant conditioning and how animals learn by consequence. And this essay is basically saying, Hey, there's so much you can do to train animals using operant conditioning, but you still have to take into account the instincts an animal is born with.

This article has informed how I think about animal training in a huge way. However, I've always had one question about the conclusions that this article raises. And I'm hoping that maybe this weekend after the screening, I'll have a chance to ask this question to Bob Bailey if it is appropriate. I'll share my question with you at the end of the reading, as I think it'll make a little bit more sense if you hear it then.

Two quick announcements. One, we have just opened up enrollment in our professional course, which is a fully virtual six month program designed to train people to become dog trainers. There are only four spots. Applications are due May 1st, although we are accepting applications on a rolling basis. So if the spots fill before then you may be out of luck. So make sure to get more information and fill out the application if you're interested, all at schoolforthedogs.com/professionalcourse.

The second thing is I have been putting together an episode all about pet insurance, and I am really curious to hear what you think about pet insurance. If you have pet insurance, or if you have had pet insurance, if you fill out the short survey I created at schoolforthedogs.com/petinsurance, you will get a $5 coupon to storeforthedogs.com. I would really love to hear your thoughts.

[intro and music]

This is The Misbehavior of Organisms published in American Psychologist in 1961, written by Keller Breland and Marian Breland of Animal Behavior Enterprises in Hot Springs, Arkansas:

There seems to be a continuing realization by psychologists that perhaps the white rat cannot reveal everything there is to know about animal behavior. Among the voices raised on this topic, Beach 1950 has emphasized the necessity of widening the range of species subjected to experimental techniques and conditions. However, psychologists as a whole do not seem to be heeding these admonitions, as Whalen 1961 has pointed out.

Perhaps this reluctance is due in part to some dark precognition of what they might find in such investigations, for the ethologists Lorenz 1950 and Tinbergen 1951 have warned that if psychologists are to understand and predict the behavior of organisms, it is essential that they become thoroughly familiar with the instinctive behavior patterns of each new species they essay to study.

Of course, the Watsonian or neobehavioristically oriented experimenter is apt to consider instinct an ugly word. He tends to class it with Hebb's 1960 other seditious notions, which were discarded in the behavioristic revolution. And he may have some premonition that he will encounter this bete noir in extending the range of species and situations studied.

We can assure him that his apprehensions are well grounded. In our attempt to extend a behavioristically oriented approach to the engineering control of animal behavior by operant conditioning techniques, we have fought a running battle with the seditious notion of instinct. It might be of some interest to the psychologist to know how the battle is going, and to learn something about the nature of the adversary he is likely to meet if and when he tackles new species in new learning situations.

Our first report, Breland and Breland 1951 in the American Psychologist, concerning our experiences in controlling animal behavior, was wholly affirmative and optimistic, saying in essence that the principles derived from the laboratory could be applied to the extensive control of behavior under nonlaboratory conditions throughout a considerable segment of the phylogenetic scale.

When we began this work, it was our aim to see if the science would work beyond the laboratory, to determine if animal psychology could stand on its own feet as an engineering discipline. These aims have been realized. We have controlled a wide range of animal behavior and have made use of the great popular appeal of animals to make it an economically feasible project.

Conditioned behavior has been exhibited at various municipal zoos and museums of natural history, and has been used for department store displays, for fair and trade convention exhibits, for entertainment at tourist attractions, on television shows and in the production of television commercials. 38 species totaling over 6,000 individual animals have been conditioned. And we have dared to tackle such unlikely subjects as reindeer, cockatoos, raccoons, porpoises, and whales.

Emboldened by this consistent reinforcement, we have ventured further and further from the security of the Skinner box. However, in this cavalier extrapolation, we have run afoul of a persistent pattern of discomforting failures. These failures, although disconcertingly frequent and seemingly diverse, fall into a very interesting pattern. They all represent breakdowns of conditioned operant behavior. From a great number of such experiences, we have selected more or less at random, the following examples.

The first instance of our discomfiture might be entitled, What makes Sammy dance? In the exhibit in which this occurred, the casual observer sees a grown bantam chicken emerge from a retaining compartment when the door automatically opens. The chicken walks over about three feet and pulls a rubber loop on a small box, which starts a repeated auditory stimulus pattern, a four-note tune.

The chicken then steps up onto an 18 inch, slightly raised disc, thereby closing a timer switch, and scratches vigorously round and round over the disc for 15 seconds at the rate of about two scratches per second, until the automatic feeder fires in the retaining compartment. The chicken goes into the compartment to eat thereby automatically shutting the door.

The popular interpretation of this behavior pattern is that the chicken has turned on the jukebox and dances. The development of this behavioral exhibit was wholly unplanned. In the attempt to create quite another type of demonstration which required a chicken simply to stand on a platform for 12 to 15 seconds, we found that over 50% developed a very strong and pronounced scratch pattern, which tended to increase in persistence as the time interval was lengthened. Another 25% or so developed other behaviors, pecking at spots, et cetera.

However, we were able to change our plan so as to make use of the scratch pattern, and the result was the dancing chicken exhibit described above. In this exhibit, the only real contingency for reinforcement is that the chicken must depress the platform for 15 seconds. In the course of a performing day, about three hours for each chicken, a chicken may turn out over 10,000 unnecessary, virtually identical responses.

Operant behaviorists would probably have little hesitancy in labeling this as an example of Skinnerian superstition, Skinner 1948, or mediating behavior, and we list it first to whet their explanatory appetite. However, a second instance involving a raccoon does not fit so neatly into this paradigm.

The response concerned the manipulation of money by the raccoon who has hands rather similar to those of the primates. The contingency for reinforcement was picking up the coins and depositing them in a five inch metal box. Raccoons condition readily, have good appetites, and this one was quite tame and an eager subject. We anticipated no trouble.

Conditioning him to pick up the first coin was simple. We started out by reinforcing him for picking up a single coin. Then the metal container was introduced with the requirement that he drop the coin into the container. Here we ran into the first bit of difficulty. He seemed to have a great deal of trouble letting go of the coin. He would rub it up against the inside of the container, pull it back out and clutch it firmly for several seconds. However, he would finally turn it loose and receive his food reinforcement.

Then the final contingency. We put him on a ratio of two requiring that he pick up both coins and put them in the container. Now the raccoon really had problems. And so did we. Not only could he not let go of the coins, but he spent seconds, even minutes, rubbing them together in a most miserly fashion and dipping them into the container.

He carried on this behavior to such an extent that the practical application we had in mind, a display featuring a raccoon putting money in a piggybank, simply was not feasible. The rubbing behavior became worse and worse as time went on in spite of nonreinforcement.

For the third instance, we returned to the gallinaceous birds. The observer sees a hopper full of oval plastic capsules, which contain small toys, charms and the like. When the SD [that stands for discriminative stimulus], a light, is presented to the chicken, she pulls a rubber loop, which releases one of these capsules onto a slide about 16 inches long inclined at about 30 degrees. The capsule rolls down the slide and comes to rest near the end. Here, one or two sharp, straight pecks by the chicken will knock it forward off the slide and out to the observer. And the chicken is then reinforced by an automatic feeder.

This is all very well. Most chickens are able to master these contingencies in short order. The loop pulling presents no problems. She then has only to peck the capsule off the slide to get her reinforcement. However, a good 20% of all chickens tried on this set of contingencies failed to make the grade. After they have pecked a few off the slide, they begin to grab at the capsules and drag them backwards into the cage. Here, they pound them up and down on the floor of the cage.

Of course this results in no reinforcement for the chicken. And yet some chickens will pull in over half of the capsules presented to them. Almost always, this problem behavior does not appear until after the capsules begin to move down the slide. Conditioning is begun with the stationary capsules placed by the experimenter. When the pecking behavior becomes strong enough so that the chicken is knocking them off the slide and getting reinforced consistently, the loop pulling is conditioned to the light. The capsules then come down the slide to the chicken. Here, most chickens who before did not have this tendency will start grabbing and shaking.

The fourth incident also concerns a chicken. Here, the observer sees a chicken in a cage about four feet long, which is placed alongside a miniature baseball field. The reason for the cage is the interesting part. At one end of the cage is an automatic electric feed hopper. At the other is an opening through which the chicken can reach and pull a loop on a bat.

If she pulls the loop hard enough, the bat, solenoid operated, will swing, knocking a small baseball up the playing field. If it gets past the miniature toy players on the field and hits the back fence, the chicken is automatically reinforced with food at the other end of the cage. If it does not go far enough or hits one of the players, she tries again. This results in behavior on an irregular ratio. When the feeder sounds, she then runs down the length of the cage and eats.

Our problems began when we tried to remove the cage for photography. Chickens that had been well conditioned to this behavior became wildly excited when the ball started to move. They would jump up on the playing field, chase the ball all over the field, even knock it off on the floor and chase it around, pecking it in every direction, although they had never had access to the ball before. This behavior was so persistent and so disruptive, in spite of the fact that it was never reinforced, that we had to reinstate the cage.

The last instance we shall relate in detail is one of the most annoying and baffling for a good behaviorist. Here a pig was conditioned to pick up large wooden coins and deposit them in a large piggy bank. The coins were placed several feet from the bank and the pig required to carry them to the bank and deposit them, usually four or five coins for one reinforcement. Of course, we started out with one coin near the bank.

Pigs condition very rapidly. They have no trouble taking ratios. They have ravenous appetites, naturally, and in many ways are among the most tractable animals we have worked with. However, this particular problem behavior developed in pig after pig, usually after a period of weeks or months getting worse every day.

At first, the pig would eagerly pick up one dollar, carry it to the bank, run back and get another, carry it rapidly and neatly, and so on until the ratio was complete. Thereafter, over a period of weeks, the behavior would become slower and slower. He might run over eagerly for each dollar, but on the way back, instead of carrying the dollar and depositing it simply and cleanly, he would repeatedly drop it, root it, drop it again, root it along the way, pick it up, toss it up in the air, root it some more. And so on.

We thought this behavior might simply be the dilly dallying of an animal on a low drive. However, the behavior persisted and gained in strength in spite of a severely increased drive. He finally went through the ratio so slowly that he did not get enough to eat in the course of a day. Finally, it would take the pig about 10 minutes to transport four coins, a distance of about six feet. This problem behavior developed repeatedly in successive pigs.

There have also been other instances. Hamsters that stopped working in a glass case after four or five reinforcements. Porpoises and whales that swallow their manipulanda [manipulanda? don’t know that word], balls and inner tubes. Cats that will not leave the area of the feeder, rabbits that will not go to the feeder. The great difficulty in many species of conditioning vocalization with food reinforcement, problems in conditioning a kick in a cow, the failure to get appreciably increased effort out of ungulates with increased drive, and so on.

These, we shall not dwell on in detail, nor shall we discuss how they might be overcome. These egregious failures came as a rather considerable shock to us, for there was nothing in our background in behaviorism to prepare us for such gross inability to predict and control the behavior of animals with which we had been working for years.

The examples listed, we feel, represent a clear and utter failure of conditioning theory. They are far from what one would normally expect on the basis of the theory alone. Furthermore, they are definite. Observable. The diagnosis of theory failure does not depend on subtle statistical interpretations or on semantic legerdemain. The animal simply does not do what he has been conditioned to do.

It seems perfectly clear that with the possible exception of the dancing chicken, which could conceivably, as we have said, be explained in terms of Skinner's superstition paradigm, the other instances do not fit the behavioristic way of thinking. Here we have animals, after having been conditioned to a specific learned response, gradually drifting into behaviors that are entirely different from those which were conditioned.

Moreover, it can easily be seen that these particular behaviors to which the animals drift are clear cut examples of instinctive behaviors having to do with the natural food-getting behaviors of the particular species. The dancing chicken is exhibiting the gallinaceous bird’s scratch pattern that in nature often precedes ingestion. The chicken that hammers capsules is obviously exhibiting instinctive behavior having to do with breaking open of seed pods or the killing of insects, grubs, et cetera.

The raccoon is demonstrating so-called washing behavior. The rubbing and washing response may result, for example, in the removal of the exoskeleton of a crayfish. The pig is rooting or shaking, behaviors which are strongly built into this species and are connected with the food-getting repertoire.

These patterns to which the animals drift require great physical output, and therefore are a violation of the so-called law of least effort. And, most damaging of all, they stretch out the time required for reinforcement when nothing in the experimental setup requires them to do so. They have only to do the little tidbit of behavior to which they were conditioned. For example, pick up the coin and put it in the container to get reinforced immediately. Instead they drag the process out for a matter of minutes when there is nothing in the contingency which forces them to do this. Furthermore, increasing the drive merely intensifies this effect.

It seems obvious that these animals are trapped by strong instinctive behaviors. And clearly we have here a demonstration of the prepotency of such behavior patterns over those which have been conditioned. We have termed this phenomenon instinctive drift.

The general principle seems to be that wherever an animal has strong instinctive behaviors in the area of the conditioned response, after continued running, the organism will drift toward the instinctive behavior to the detriment of the conditioned behavior, and even to the delay or preclusion of the reinforcement. In a very boiled down, simplified form, it might be stated as: learned behavior drifts toward instinctive behavior.

All this of course is not to disparage the use of conditioning techniques, but is intended as a demonstration that there are definite weaknesses in the philosophy underlying these techniques. The pointing out of such weaknesses should make possible a worthwhile revision in behavior theory.

The notion of instinct has now become one of the basic concepts in an effort to make sense of the welter of observations which confront us. When behaviorism tossed out instinct, it is our feeling that some of its power of prediction and control were lost with it. From the foregoing examples, it appears that although it was easy to banish the instinctivists from the science during the behavioristic revolution, it was not possible to banish instinct so easily.

And if, as Hebb suggests, it is advisable to reconsider those things that behaviorism explicitly threw out, perhaps it might likewise be advisable to examine what they tacitly brought in: the hidden assumptions which led most disastrously to these breakdowns in the theory.

Three of the most important of these tacit assumptions seem to us to be that the animal comes to the laboratory as a virtual tabula rasa, that species differences are insignificant, and that all responses are about equally conditionable to all stimuli. It is obvious, we feel from the foregoing account, that these assumptions are no longer tenable.

After 14 years of continuous conditioning and observation of thousands of animals, it is our reluctant conclusion that the behavior of any species cannot be adequately understood, predicted or controlled without knowledge of its instinctive patterns, evolutionary history and ecological niche. In spite of our early successes with the application of behavioristically oriented conditioning theory, we readily admit now that ethological facts and attitudes in recent years have done more to advance our practical control of animal behavior than recent reports from American “learning labs.”

Moreover, as we have recently discovered, if one begins with evolution and instinct as the basic format for the science, a very illuminating viewpoint can be developed, which leads naturally to a drastically revised and simplified conceptual framework of startling explanatory power to be reported elsewhere. It is hoped that this playback on the theory will be behavioral technology’s partial repayment to the academic science whose impeccable empiricism we have used so extensively.

So here is what I would like to know about this essay. And I'm hoping maybe I can ask this question to Bob Bailey. Actually, maybe it's kind of two questions. Anyway, the first time I read this essay, my takeaway from it was that classical conditioning is so strong or so powerful that animals can create associations with objects that lead them to treat the object as if it were the thing with which they are associating it. As if it is the primary reinforcer.

So the pig has enough experience getting food for putting his coin in the piggy bank that eventually he starts engaging in instinctual behaviors, built-in behaviors that relate to the way in which he would be treating actual food. But in rereading the essay, I realize that maybe is a conclusion that I'm making that isn't actually in the text, or maybe I'm just extrapolating, and I'm not sure if that is correct.

Because classical conditioning is actually never mentioned in the essay. So maybe I'm just making assumptions. And if that assumption is correct, then what would happen if instead of using food as a primary reinforcer, you used something else, like sex, for example? That might sound silly, but there is animal training that is done where the reward for the behavior is access to an opposite sex member of the same species. I've specifically heard of this done with dolphins, where the reward is letting them into a pen, letting the boy dolphins into the pen with the girl dolphins.

If sex were the primary reinforcer instead of food, would the animals that the Brelands are writing about have engaged in sexual instinctive behaviors with the objects in question? Or is it just a matter of them being engaged with these objects in a given environment, repeatedly, for a specific amount of time that has resulted in them treating the objects like food? Does it have to do with the fact that they're working with objects that are maybe the size and shape of food they might encounter?

Not sure how I can succinctly put all that into one question, because now that I've said it all out loud, it feels like there's a lot of stuff there, but that's basically my question. I guess I have a few days to figure out how to say it more clearly, but. Are they treating these secondary reinforcers, these conditioned reinforcers, like food because of all the associations they've made with these objects having to do with food?

If a different reinforcer, primary reinforcer, was used would their engagement with the objects relate to that different primary reinforcer, and does training have anything to do with it, or is it just the fact that they're engaging with these objects at all?

The latter question is something I've thought about more recently watching my daughter, who seems to me to have strong caring instincts. Dr. Jaak Panksepp, whose name I'm probably saying wrong, talks in his work about the emotional brain and the things that drive behavior being seven things: seeking, fear, rage, lust, care, panic/grief and play. And she can make like a tiny baby to care for out of pretty much anything.

Like I think if I took a Sharpie and put two eyes on a roll of toilet paper, she'd pretty much want to tuck it into bed and read it a good night story. Now, I've never tried to train her to do anything specific with a roll of toilet paper that has eyes on it. But I could imagine that, were I to try to do that, the instinct to take care of it like a baby might overpower whatever it is I was trying to get her to do with it.

But the instinct is there, even if I am not encouraging her to engage with that object in any specific way. And I wonder the same thing about the pigs. If the pigs were just in an environment with those coins, would they start spontaneously treating those coins like food because they were present?

Now, before I re-read this essay recently, I had stuck in my head that it was about, and this is what I'm now not so sure of, animals treating objects that they've been conditioned to be interested in as if those objects were the primary reinforcer. I thought about that a lot as it relates to people and money.

I started to think about how people are so deeply conditioned to associate primary reinforcers, the stuff we really need in life, with money, that it seems like they sometimes treat money as if it were the primary reinforcer. At least in terms of the way people hoard it, guard it, stockpile it. I mean, people don't usually eat money or want to have sex with money.

But I dunno, the way people sometimes show off what their money can buy in ways that are conspicuous. Maybe it has to do with an instinct we have to show off our resources. Like the peacock showing off his feathers to show his virility, good health, et cetera. We show off fancy cars or conspicuously expensive jewelry as a way to flaunt the fact that we have extra resources because of conditioned associations that are so strong and the fact that it's easier than showing how full your refrigerator is. These conditioned reinforcers being easier to show than the primary reinforcers.

Anyway, I hope this article has sparked interesting, if perhaps complicated, thoughts about behavior in your mind as well. And I hope you'll join me for this screening and a conversation with Bob Bailey tomorrow. Sign up at schoolforthedogs.com/Bailey.

[outro and music]